AI Auditing

Kontent.ai

Audit and tracking system design for AI actions in enterprise CMS – implementing the Accountability principle from the Responsible AI Framework to provide clear visibility into AI vs. human actions.

📋 Context

This work implements the Accountability principle defined in the Responsible AI Framework. Users and administrators need clear visibility into which actions were performed by AI agents and which by human users.

🧩 Key Design Challenge

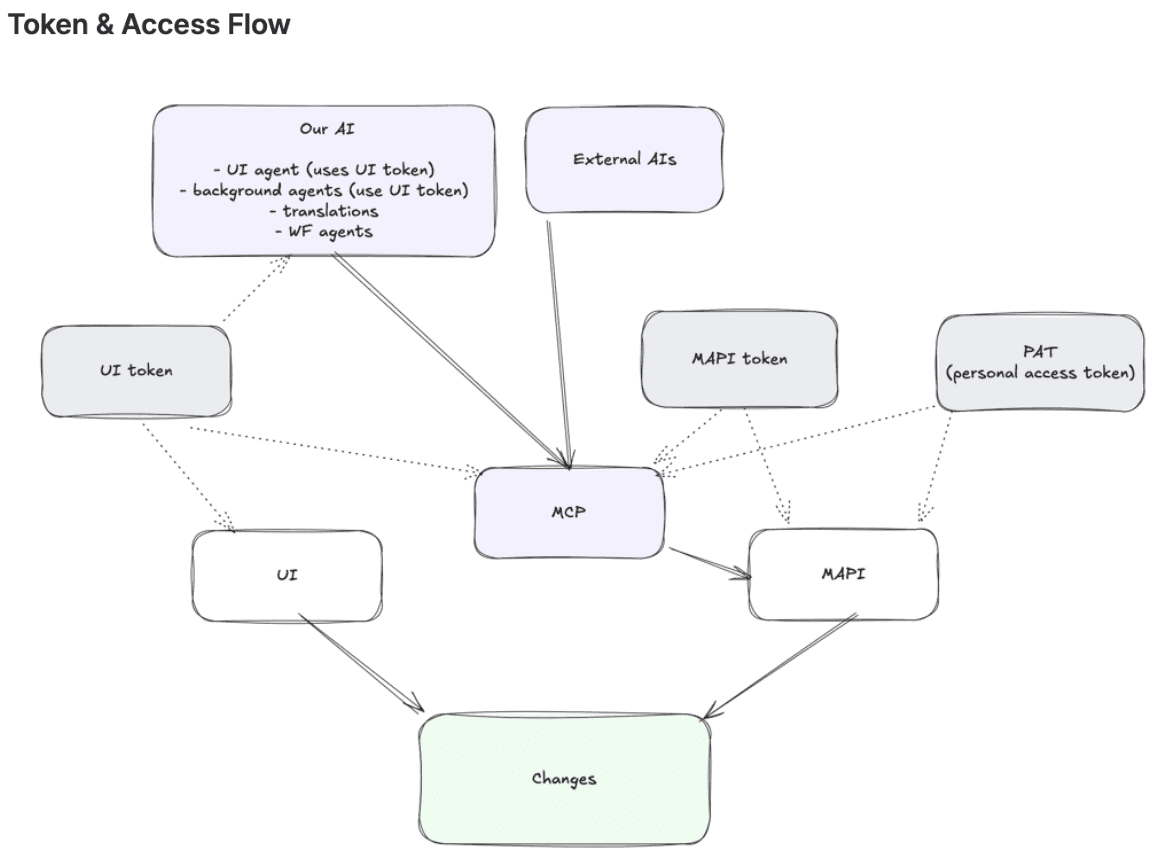

Audit information flows in from multiple AI integration points:

- External AI integrations (third-party LLMs via API)

- Internal AI features (Ask AI, WYSIWYG AI)

- Our own AI Agent

- Workflow Agents (automated triggers)

💡 Proposed Design Solutions

Phased approach to implementing AI auditing capabilities.

- V1 - Audit Log Enhancement – Expand 'Source' field with values like 'AI Agent' or 'MCP'

- V1 - Version History Update – Add 'on behalf of' information showing which agent made the changes

- V1 - Extended Filters – Add AI-specific filter to audit log

- V2 - Unified View – Include version history information directly in audit log

- V2 - Content Items Filter – Enable filtering by content item changes
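
The V1 and V2 items above could be modeled roughly as follows. This is a minimal sketch, not the Kontent.ai data model: every type, field, and function name here is hypothetical, the 'Web UI' and 'API' source values are assumed to exist already, and treating 'MCP' as an AI source is an assumption.

```typescript
// Hypothetical shapes for illustration; none of these names come from the Kontent.ai API.
type AuditSource = "Web UI" | "API" | "AI Agent" | "MCP";

interface AuditLogEntry {
  id: string;
  action: string;
  contentItemId?: string;
  source: AuditSource;      // V1: expanded 'Source' field
  userId: string;           // the human account the action is attributed to
  onBehalfOfAgent?: string; // V1: which agent made the change for that user
}

// Assumption: both new source values count as AI-originated.
const AI_SOURCES: ReadonlySet<AuditSource> = new Set<AuditSource>(["AI Agent", "MCP"]);

interface AuditFilter {
  aiOnly?: boolean;       // V1: AI-specific filter
  contentItemId?: string; // V2: filter by content item
}

function filterAuditLog(
  entries: readonly AuditLogEntry[],
  filter: AuditFilter
): AuditLogEntry[] {
  return entries.filter(
    (e) =>
      (!filter.aiOnly || AI_SOURCES.has(e.source)) &&
      (filter.contentItemId === undefined ||
        e.contentItemId === filter.contentItemId)
  );
}

// Label shown in version history: the agent, if any, plus the user it acted for.
function versionHistoryLabel(entry: AuditLogEntry): string {
  return entry.onBehalfOfAgent
    ? `${entry.onBehalfOfAgent} on behalf of ${entry.userId}`
    : entry.userId;
}

const sampleLog: AuditLogEntry[] = [
  { id: "1", action: "update", contentItemId: "article-1", source: "Web UI", userId: "alice" },
  { id: "2", action: "update", contentItemId: "article-1", source: "AI Agent", userId: "alice", onBehalfOfAgent: "AI Agent" },
  { id: "3", action: "create", contentItemId: "article-2", source: "MCP", userId: "bob", onBehalfOfAgent: "External LLM" },
];
```

With this shape, `filterAuditLog(sampleLog, { aiOnly: true, contentItemId: "article-1" })` would return only entry 2, and a single optional `onBehalfOfAgent` field is enough to drive both the version history label and the audit log filter.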

❓ Open Questions

Design decisions requiring further exploration and stakeholder input.

- How to track external LLM usage transparently?

- Should internal AI features (Ask AI) be distinguished from the AI Agent?

- Workflow Agents: Who gets attribution when the person who triggers the workflow is not the person who configured it?

- How should approved AI suggestions be handled? Does user approval make the change a user action?
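
One way to keep the attribution questions open while still capturing the data is to record every party involved and postpone the choice of a single "owner". The sketch below illustrates that idea only; it is not a decided design, and all names in it are hypothetical.

```typescript
// Hypothetical attribution record: stores every party so the display rule can be decided later.
type Actor =
  | { kind: "user"; userId: string }
  | { kind: "agent"; agentName: string };

interface Attribution {
  performedBy: Actor;    // who executed the change
  triggeredBy?: string;  // user whose action fired the workflow trigger
  configuredBy?: string; // user who set up the workflow agent
  approvedBy?: string;   // user who accepted an AI suggestion
}

function describeAttribution(a: Attribution): string {
  const who =
    a.performedBy.kind === "agent"
      ? a.performedBy.agentName
      : a.performedBy.userId;
  const parts: string[] = [who];
  if (a.triggeredBy) parts.push(`triggered by ${a.triggeredBy}`);
  if (a.configuredBy) parts.push(`configured by ${a.configuredBy}`);
  if (a.approvedBy) parts.push(`approved by ${a.approvedBy}`);
  return parts.join(", ");
}
```

Because no party is discarded at write time, the answer to "who gets attribution" becomes a presentation decision that can change after stakeholder input without migrating stored audit data.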

🌊 Impact

Proper AI auditing builds trust in AI-powered features for enterprise customers with strict compliance requirements, making AI adoption viable for regulated industries.